Introduction to Artificial Intelligence

Posted: October 13, 2017 | Updated: December 9, 2022

Posted In: Articles

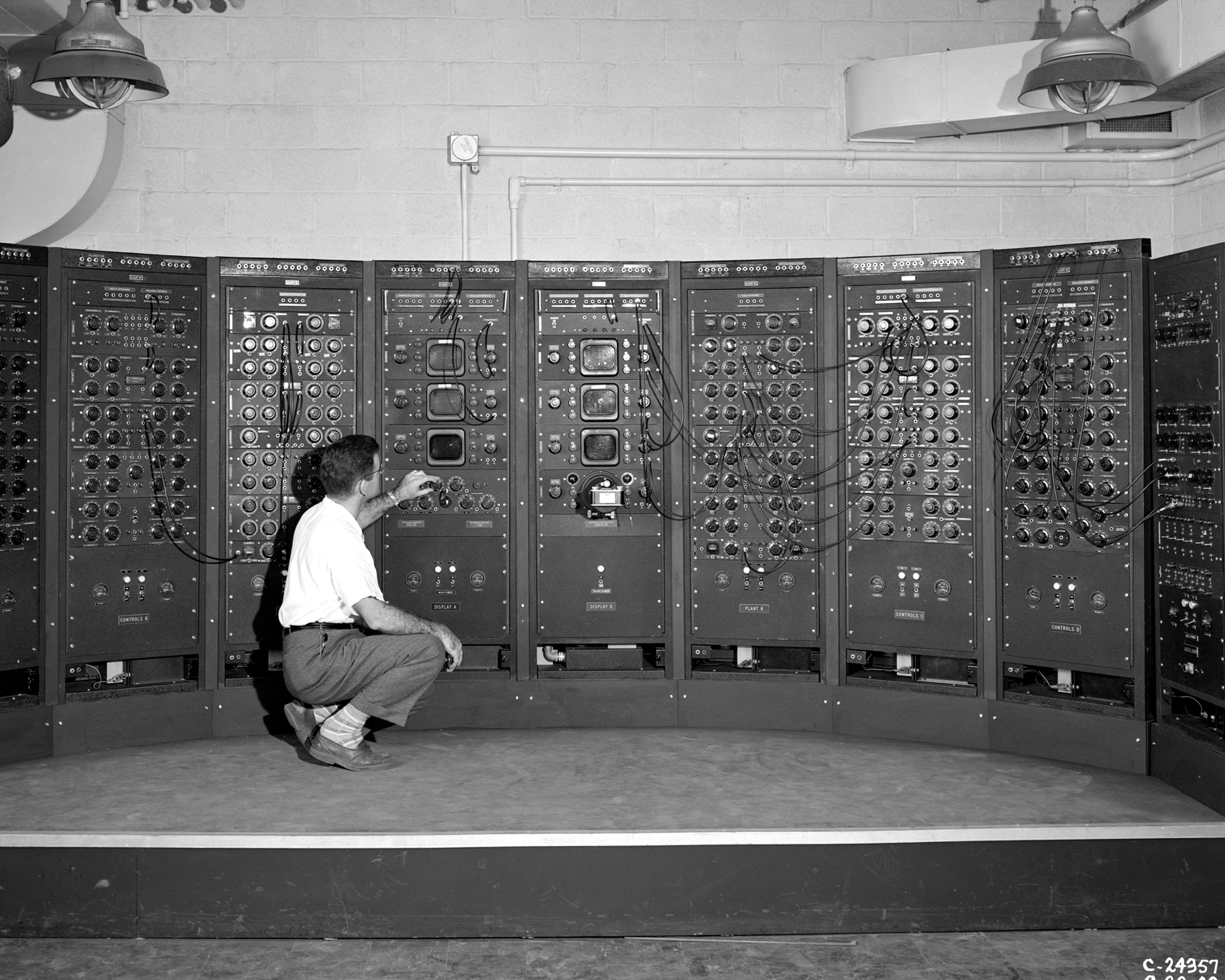

Analog Computing Machine in the Fuel Systems Building. This is an early version of the modern computer. The device is located in the Engine Research Building at the Lewis Flight Propulsion Laboratory, now John H. Glenn Research Center, Cleveland, Ohio.

The first use of “artificial intelligence,” as far as most can tell, came in 1955, when John McCarthy used the term in his proposal for the Dartmouth Conference. Around the same time, Carnegie Mellon engineers Allen Newell, J.C. Shaw, and Herbert Simon demonstrated Logic Theorist, often described as the first running AI program. In 1964, Dr. Danny Bobrow showed that a computer could understand natural language well enough to solve algebra word problems, and in 1965 Joseph Weizenbaum debuted ELIZA, an AI program that carries on conversations in English on any topic.

Throughout the following decades, machine learning and natural language processing advanced steadily and began to reveal real-world applications. Robots, autonomous vehicles, hierarchical planning programs, and autonomous drawing programs were showcased at AI conferences and published in scientific journals.

In 1981, the Japanese government committed $850 million to develop AI-powered computers, but it wasn’t until the 1990s that AI as we now know it really began to take shape. Richard Wallace introduced A.L.I.C.E. in 1995, which far surpassed ELIZA’s capabilities thanks to the immense natural language sample data enabled by the internet.

By 2000, AI could be seen entering the consumer marketplace with Honda’s lovable ASIMO robot waiter.

In many ways, language and the desire to understand how and why we communicate the way we do are at the heart of AI development. Almost every AI advancement throughout the twentieth century was the result of a mathematical approach to linguistic analysis. And now, with the boisterous, emotional, chaotic, and data-rich digital environment we live in, AI is growing and learning at a steady clip.

Artificial intelligence is different from human intelligence. Humans can learn incredibly quickly. Right now, most AI takes about 10 times as long as a human to learn something. Increased work on deep-learning neural networks by groups like Google is closing the gap, though.

Once AI learns a skill, however, it completes tasks with more speed and accuracy than humans. AI excels when it’s trained to solve a specific problem. It gives us the ability to solve huge problems or analyze vast sets of data more quickly, so we can tackle bigger and better things every day.

A.I. Pillars

Natural Language Processing

How can a machine understand words?

The prime directive of Natural Language Processing (NLP) is to understand language and what it means. That goes beyond the definition of a word to include the context in which it is used, what the pace indicates about a person’s anxiety levels, and more. StoryFit technology implements natural language processing to read and understand stories with the ultimate goal of figuring out what makes them resonate with, enflame, or bore consumers. It uses NLP to infer the feelings that different linguistic styles, circumstances, and diction evoke in readers.

A component of NLP called sentiment analysis also comes into play. Using sentiment analysis, StoryFit is able to understand the emotional arc of each book. The text is analyzed and each part of it is categorized as positive (happy statements) or negative (sad or angry statements). Within each language, words can be classified as positive (elated, kiss, jump), negative (smash, kill, cry), or neutral (the, a, road). Take these all together and graph the results, and you can see the emotional arc of a text mapped out in visual form, called a sentiment map.
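To make the idea concrete, here is a minimal sketch of word-level sentiment scoring. It is not StoryFit's actual method: the tiny word lists come from the examples above, and the function names (`score_segment`, `sentiment_arc`) and the sample story are assumptions made up for illustration.

```python
import re

# Tiny illustrative lexicon taken from the example words in the text.
# Assumption: a real system would use a much larger, validated word list.
POSITIVE = {"elated", "kiss", "jump"}
NEGATIVE = {"smash", "kill", "cry"}


def score_segment(text):
    """Return (positive word count - negative word count) for one chunk of text."""
    words = re.findall(r"[a-z']+", text.lower())
    # Each word adds +1 if positive, -1 if negative, and 0 if neutral.
    return sum((w in POSITIVE) - (w in NEGATIVE) for w in words)


def sentiment_arc(segments):
    """Score each segment in reading order; the list of scores is the arc."""
    return [score_segment(s) for s in segments]


# Hypothetical three-part "story", invented for this example.
story = [
    "They kiss and jump, elated.",
    "The road is long.",
    "Vandals smash the window and the children cry.",
]

arc = sentiment_arc(story)
for position, score in enumerate(arc):
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    print(position, score, label)
```

Plotting the scores against their position in the text would give a rough sentiment map: this toy story starts high, flattens out, and ends low.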

Predictive Analytics

How do predictive analytics work and why should they be trusted?

Predictive analytics is like super-powered betting. This is what the movie Moneyball was about: using machine learning, statistical algorithms, and historical data to make bets on future outcomes. Predictive analytics is essentially an incredibly educated guess about what the future holds.

In terms of trust: yes, AI predictions can be trusted. In fact, they already are, every day. Weather reports are instances of predictive analytics. How much you trust a prediction should be directly tied to the quality of the training data. This is why online polls that are self-selecting and can be taken over and over aren’t as predictive as statistically diverse in-person polls with carefully targeted and crafted questions.
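As a rough illustration of making an educated guess from historical data, the sketch below fits a straight line to past results and extends it one step into the future. The win totals are made-up numbers, and `fit_line` and `predict` are hypothetical helper names. Real predictive models are usually far richer than a single trend line.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply a fitted (slope, intercept) pair to a new x value."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical history: season number -> games won (invented data).
seasons = [1, 2, 3, 4, 5]
wins = [60, 65, 67, 73, 75]

model = fit_line(seasons, wins)
forecast = predict(model, 6)
print(round(forecast, 1))
```

The forecast is only as good as the history it was fitted on. If the past seasons were unrepresentative, the line would extend that bias into the prediction, which is the same reason a self-selecting poll predicts poorly.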

Machine Learning

How does a computer learn?

One approach to AI that we use is called “machine learning.” In a nutshell, machine learning “lets computers learn without explicit programming. In analysis, the technology uses algorithms that learn from the data, and, in turn, grow and change when exposed to new information, ultimately uncovering those all-important insights.” [Google]

Machine learning AI is designed to mimic the human brain’s processing ability and the logical trees that help us interpret the world every second. Our brains are CPUs that take input through our senses and, from experience, draw logical conclusions about what we are encountering. Then, based on internal knowledge or feeling, we determine the importance of the input. AI does the same thing, but it is usually applied to a highly specific task, which it performs more often, more quickly, and more accurately than humans. Machine learning does this by ingesting gobs and gobs of data and, given specific parameters, determining the similarities or differences in the data to draw conclusions. It studies and learns the environment of the data at hand.
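One very simple way to see learning from similarities and differences in action is a nearest-centroid classifier, sketched below. This is an illustrative technique chosen for its brevity, not a description of StoryFit's models. The features, labels, and numbers are hypothetical.

```python
import math
from collections import defaultdict


def train(examples):
    """Learn one centroid (average point) per label from labeled examples."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(centroids, features):
    """Pick the label whose centroid is closest to the new data point."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Hypothetical training data:
# (average sentence length, exclamation marks per page) -> genre
examples = [
    ((8.0, 5.0), "thriller"),
    ((9.0, 6.0), "thriller"),
    ((22.0, 1.0), "literary"),
    ((25.0, 0.0), "literary"),
]

centroids = train(examples)
print(classify(centroids, (10.0, 4.0)))
print(classify(centroids, (21.0, 1.0)))
```

Nothing in `classify` spells out what a thriller looks like. The rule comes entirely from the examples, so feeding `train` more or better data changes the conclusions without changing the code.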

StoryFit A.I.

StoryFit is single-mindedly focused on applying the latest AI technology to the entertainment industry. We’re passionate about the intersection of technology and media and dedicated to supporting the process of storytelling.

As so much of the history of artificial intelligence makes clear, humans are driven by a desire not just to communicate, but to understand the nuances and meaning of that communication beyond just the words on the page.

Beginning with kindergarten assignments to draw ourselves with our parents at home, we are taught narrative form as children to help us make order of the world around us. Deriving meaning from apparently meaningless occurrences and assigning value to relationships all comes back to story: a universal logic system. Inciting action, climax, falling action, resolution. Cause and effect. Consequence and reward. Villains and threats, heroes and allies. All of these significant and community-forming concepts are conveyed through story form. So understanding stories is one of the most resonant ways to understand human desire, development, and potential.

We see this fact reflected in our books and movies. We don’t sit down to enjoy textbooks or manuals, and we don’t recline on a Sunday afternoon in front of the television to watch training videos and sexual harassment protocols. Arguably, all of these would be more materially productive, but they don’t satisfy that basic human need to understand the world through story, to engage in empathy and learn. Stories are the basis of every viable form of media: movies, books, television, video games, RPGs, etc.

By focusing on that core story through machine learning, StoryFit strives to deliver meaningful information about the best way to tell it, along with insights that will prove useful to other projects and give storytellers the tools to connect with their audience.

After all, as computational linguist Inderjeet Mani said, “computer science isn’t as far removed from the study of literature as you might think.”